Kernel Approximation Machine Learning

To reduce the impact number of random features Le et al. This conference is the 1st international conference on kernel-based approximation methods in China.


So again, from sklearn.kernel_approximation we import RBFSampler this time.

Specifically, we show how to derive new kernel functions. Kernel methods offer an interpretable way to model nonlinear functions, but they are difficult to scale due to their computational challenges. Choose a kernel $k$ with $k(0) = 1$ and take its Fourier transform $p(\omega)$, which will be a pdf over $\mathbb{R}^d$.
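The relationship between the kernel and its Fourier transform can be written out explicitly. The block below is a sketch that assumes the kernel is shift-invariant and real-valued, so that it depends only on the difference of its arguments, and that the Fourier transform is normalized so the density integrates to $k(0) = 1$. These assumptions are not stated in the text above.

```latex
k(x - y) = \int_{\mathbb{R}^d} p(\omega)\, e^{i \omega^\top (x - y)}\, d\omega
         = \mathbb{E}_{\omega \sim p}\left[ \cos\left( \omega^\top (x - y) \right) \right]
```

Under these assumptions the kernel value is an expectation over frequencies, which is what makes a Monte Carlo estimate from a finite set of sampled frequencies possible.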

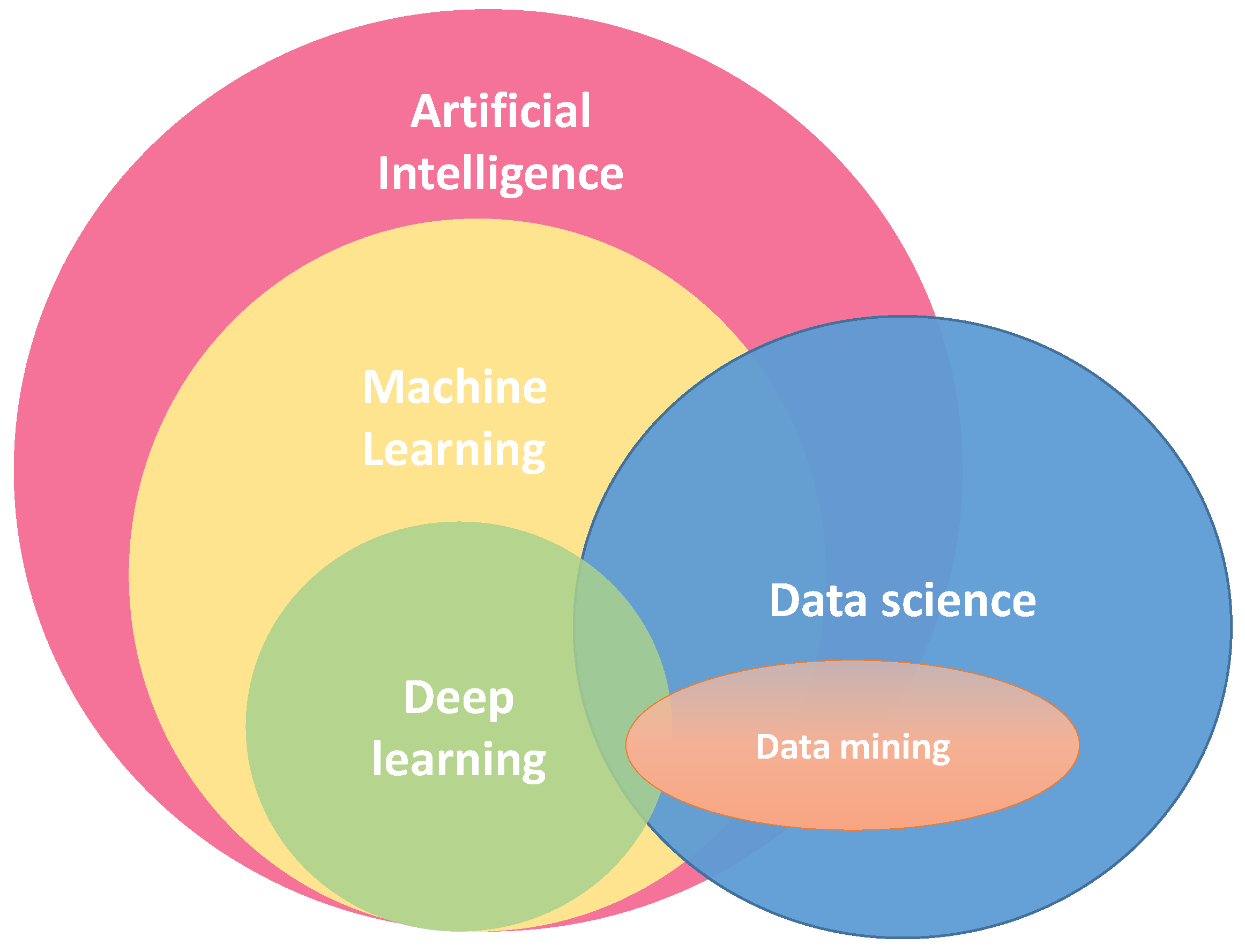

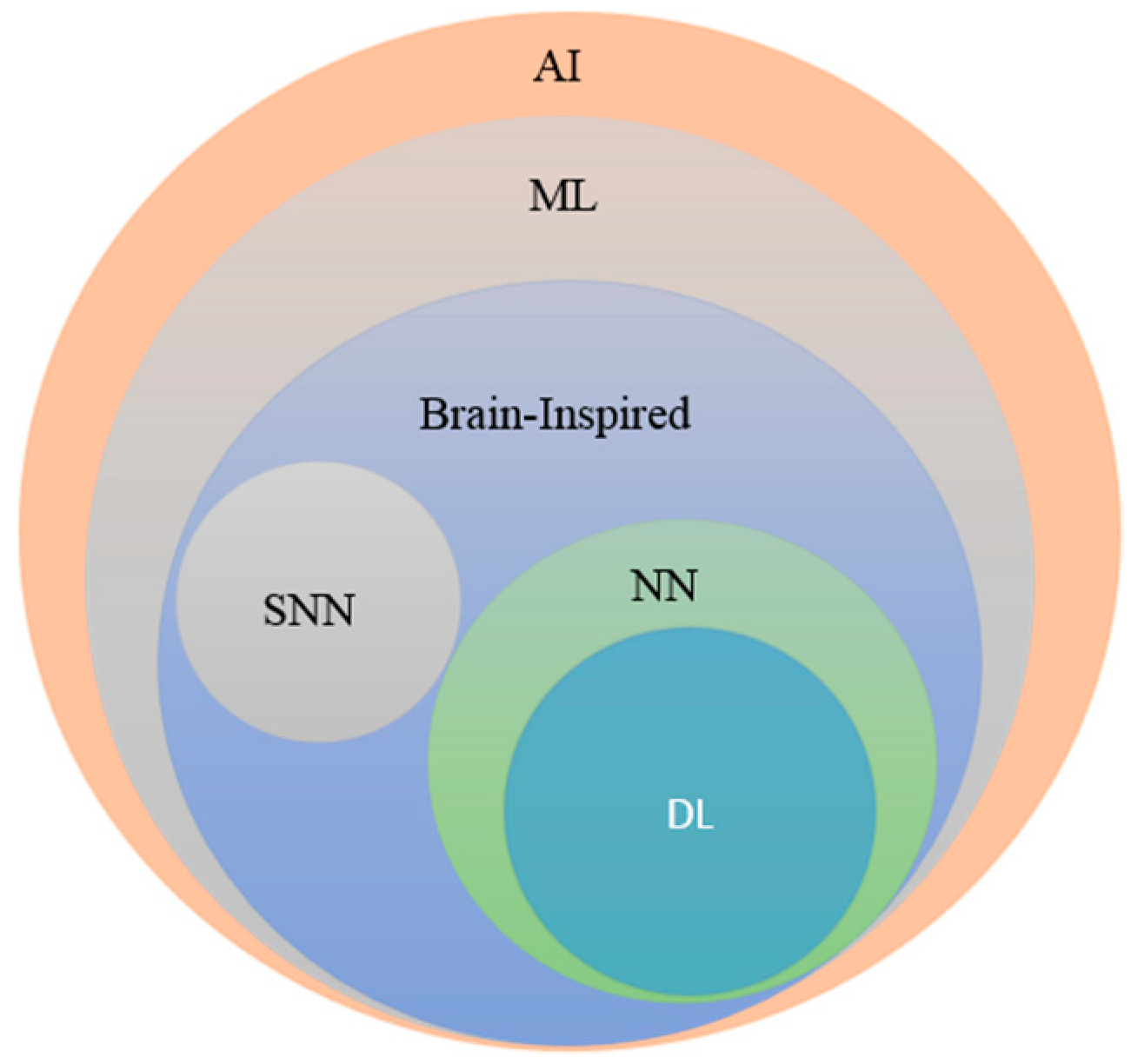

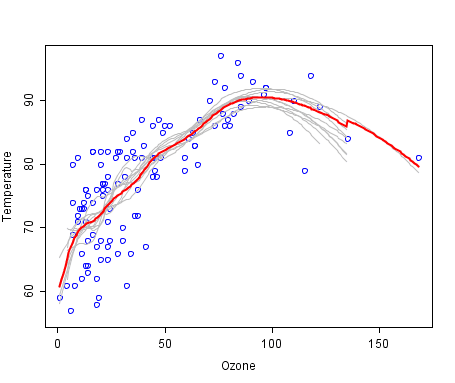

The notion of multiple kernel learning is presented, and a discussion on sparse modeling for nonparametric models in the context of additive models is provided. The concept of random Fourier features for kernel approximation is introduced, and its application to online and distributed learning is discussed. This submodule contains functions that approximate the feature mappings that correspond to certain kernels, as they are used, for example, in support vector machines (see Support Vector Machines).

The following feature functions perform non-linear transformations of the input, which can serve as a basis for linear classification or other algorithms. Kernel Approximation, scikit-learn 0.24.2 documentation.

In this thesis, we focus on applying kernel methods to supervised learning in machine learning and to uncertainty quantification of learning algorithms. They yield a new random Fourier features algorithm for approximating Gaussian and Cauchy (rational quadratic) kernel matrices. In contrast to approaches such as [22] and [23], which replace the conventional neural.

Another kernel approximation method is the RBFSampler. Essentially, kernel approximation is equivalent to learning an approximated subspace in the high-dimensional feature vector space induced and characterized by the kernel function.

The kernel method maps input data into a high-dimensional feature space and computes the similarity in the feature space without computing the coordinates of the data in that space. For low-dimensional data our method uses a. In this section, we consider how to compose the nonlinear mappings induced by kernel functions.

We're then going to create an instance of the RBFSampler class, calling it rbfSample; for the RBFSampler, RBF is the only kernel that can be used. The computational complexity of the kernel method is determined by the size of the data, regardless of the dimension of the feature space. Baseline model. Step 6: Evaluate the model. Step 7: Construct the Kernel.
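The RBFSampler step described above might look like the following in scikit-learn. This is a minimal sketch, not the original tutorial's code: the synthetic dataset, the gamma and n_components values, and the choice of SGDClassifier as the linear model are all assumptions made for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.kernel_approximation import RBFSampler
from sklearn.linear_model import SGDClassifier
from sklearn.pipeline import make_pipeline

# Synthetic data standing in for the real dataset (an assumption).
X, y = make_classification(n_samples=500, n_features=10, random_state=0)

# Create the sampler; it only approximates the RBF kernel.
# gamma and n_components are illustrative values, not tuned ones.
rbfSample = RBFSampler(gamma=0.1, n_components=200, random_state=0)

# Map the inputs to the approximate RBF feature space.
X_features = rbfSample.fit_transform(X)

# Chain a fresh sampler with a linear classifier so the feature map
# is fitted together with the model.
clf = make_pipeline(
    RBFSampler(gamma=0.1, n_components=200, random_state=0),
    SGDClassifier(max_iter=1000, tol=1e-3, random_state=0),
)
clf.fit(X, y)
train_accuracy = clf.score(X, y)
print(X_features.shape, train_accuracy)
```

The linear model only ever sees the transformed features, so training cost grows with n_components rather than with the number of pairwise kernel evaluations.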

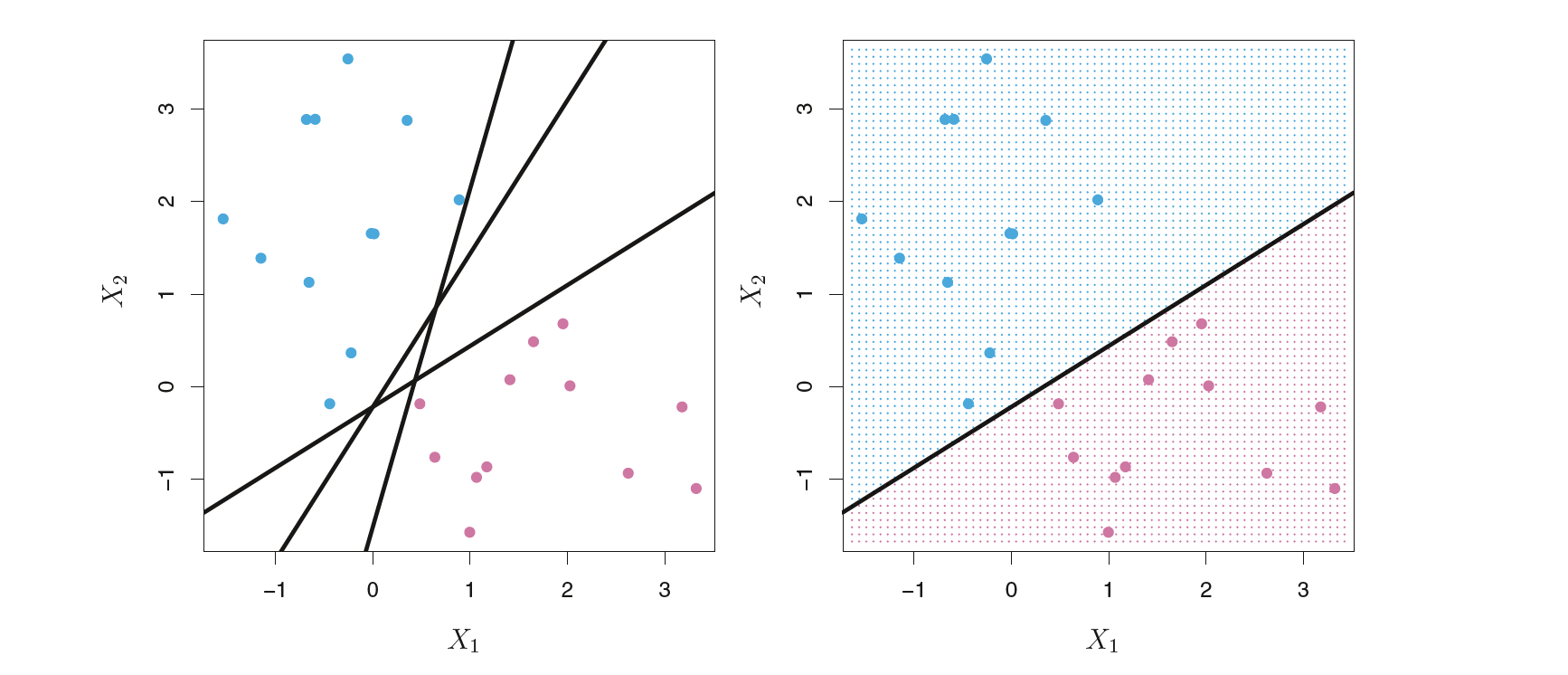

The kernel computes the inner product in the induced feature space. You will proceed as follows before you train and evaluate the model. A kernel function can be viewed as inducing a nonlinear mapping from inputs $x$ to feature vectors $\Phi(x)$.
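To make the "inner product in the induced feature space" concrete, here is a small sketch using the homogeneous degree-2 polynomial kernel. That kernel is chosen only because its feature map is finite and easy to write down; it is not a kernel discussed in the text, and the function names are hypothetical.

```python
import numpy as np

def poly2_kernel(x, y):
    """Degree-2 polynomial kernel k(x, y) = (x . y)^2."""
    return float(np.dot(x, y)) ** 2

def poly2_features(x):
    """Explicit feature map: all pairwise products x_i * x_j."""
    return np.outer(x, x).ravel()

x = np.array([1.0, 2.0, 3.0])
y = np.array([0.5, -1.0, 2.0])

# Kernel evaluated directly on the inputs (no feature vectors built).
via_kernel = poly2_kernel(x, y)

# Same quantity as an inner product of explicit 9-dimensional features.
via_features = float(np.dot(poly2_features(x), poly2_features(y)))

print(via_kernel, via_features)  # both equal 20.25
```

Both routes give the same number, but the kernel route never builds the 9-dimensional vectors. For kernels such as the RBF kernel the feature space is infinite-dimensional, so only the kernel route (or an approximation of the feature map) is available.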

As a result kernels are underutilized as tools for solving supervised learning problems on large data and measuring. Bounds are motivated by two important applications in machine learning. The objective of this conference is to provide a forum for scholars and students to meet and.

Besides, another kernel approximation technique used in our approach is the well-known Nyström method (Williams and Seeger, 2000), which has been widely applied in machine learning tasks including Gaussian Processes (Williams and Seeger, 2000), Kernelized SVMs (Zhang et al., 2012), Kernel PCA and Spectral Clustering (Zhang and Kwok). While existing kernel approximation techniques make kernel learning efficient, utilizing deep networks enables end-to-end inference with a task-specific objective. (2016b) proposed to distribute the model in dual space, including the original feature space and the random feature space that approximates the first space.
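The Nyström method mentioned above can be sketched in a few lines of NumPy. This is an illustrative version under stated assumptions, not the cited authors' implementation: landmarks are chosen uniformly at random, the kernel is an RBF kernel with an arbitrary gamma, and the helper name rbf_kernel is hypothetical.

```python
import numpy as np

def rbf_kernel(A, B, gamma):
    """RBF kernel matrix exp(-gamma * ||a - b||^2) between rows of A and B."""
    sq = (A ** 2).sum(axis=1)[:, None] + (B ** 2).sum(axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

rng = np.random.default_rng(0)
n, d, m, gamma = 300, 3, 100, 0.5  # illustrative sizes (assumptions)
X = rng.normal(size=(n, d))

# Pick m landmark points uniformly at random from the data.
landmarks = X[rng.choice(n, size=m, replace=False)]

C = rbf_kernel(X, landmarks, gamma)          # n x m cross-kernel block
W = rbf_kernel(landmarks, landmarks, gamma)  # m x m landmark block

# Nystrom approximation K ~ C W^+ C^T; the cutoff discards tiny,
# numerically unreliable singular values of W.
K_nystrom = C @ np.linalg.pinv(W, rcond=1e-10, hermitian=True) @ C.T

# Compare with the exact kernel matrix (only feasible for small n).
K_exact = rbf_kernel(X, X, gamma)
rel_error = np.linalg.norm(K_exact - K_nystrom) / np.linalg.norm(K_exact)
print(rel_error)
```

Only the n x m and m x m blocks are ever needed for the approximation itself; the full n x n matrix is computed here purely to measure the error.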

For kernel approximation, an excessive number of random features is required, which could lead to a serious computational issue. Step 1: Import the libraries. Step 2: Import the data. Step 3: Prepare the data. Step 4: Construct the input_fn. Step 5: Construct the logistic model. Draw samples $\{\omega_j\}_{j=1}^{M}$ from $p(\omega)$.

On a kernel-based method for pattern recognition, regression, approximation, and operator inversion. Deep learning based solution to kernel machine optimization for both single and multiple kernel cases. In Proceedings of the Eighteenth International Conference on Machine Learning (ICML '01), Williams, MA, 2001.

In this scenario, kernel methods are one of the main tools in the machine learning toolbox, but they lag behind others due to their inefficiency in dealing with large data. Estimate the kernel with $K(x, y) \approx \varphi(x)^\top \varphi(y)$, as in (3). To recap, using the approximation of kernels with random features works as follows.
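A minimal NumPy sketch of this recipe is given below. It assumes the Gaussian kernel exp(-gamma * ||x - y||^2), whose Fourier transform is a Gaussian density with variance 2 * gamma per coordinate, and it uses the common cosine feature map with a random phase offset. The function name rff_features and all numeric values are illustrative assumptions.

```python
import numpy as np

def rff_features(X, omegas, offsets):
    """Random Fourier feature map: sqrt(2/M) * cos(omega_j . x + b_j)."""
    M = omegas.shape[0]
    return np.sqrt(2.0 / M) * np.cos(X @ omegas.T + offsets)

rng = np.random.default_rng(0)
d, M, gamma = 5, 20000, 0.5  # illustrative values (assumptions)

# Step 1: the kernel is k(x, y) = exp(-gamma * ||x - y||^2), with k(0) = 1.
# Step 2: draw M frequencies from its Fourier transform, N(0, 2 * gamma * I),
#         plus uniform phase offsets in [0, 2*pi).
omegas = rng.normal(scale=np.sqrt(2.0 * gamma), size=(M, d))
offsets = rng.uniform(0.0, 2.0 * np.pi, size=M)

# Step 3: estimate the kernel as an inner product of the feature vectors.
X = rng.normal(size=(4, d))
Phi = rff_features(X, omegas, offsets)
K_approx = Phi @ Phi.T

# Exact kernel matrix for comparison.
sq_dists = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
K_exact = np.exp(-gamma * sq_dists)
max_abs_error = np.abs(K_approx - K_exact).max()
print(max_abs_error)
```

The error shrinks roughly like one over the square root of M, which is why a large number of random features may be needed for an accurate approximation.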

Kernel approximation is commonly employed to resolve this issue. With streaming data acquisition approximated subspaces can be constructed adaptively.

Circle Fitting With Qr Decomposition Machine Learning Coding Data

Rise Of The Machines Advances In Deep Learning For Cancer Diagnosis Trends In Cancer

Electronics Free Full Text A Survey On Machine Learning Based Performance Improvement Of Wireless Networks Phy Mac And Network Layer Html

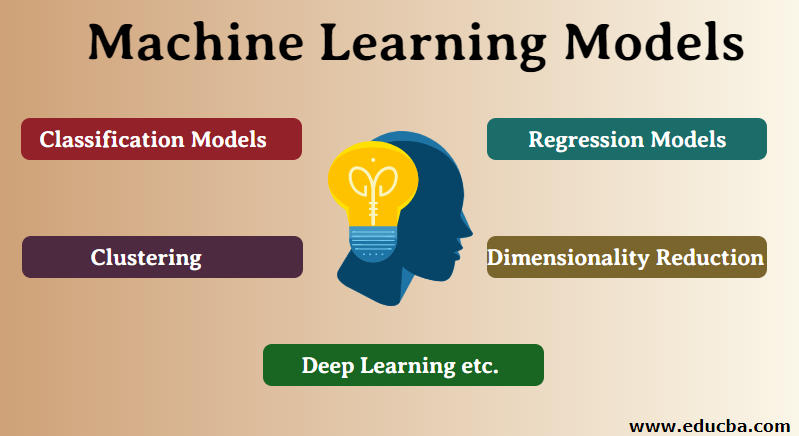

Machine Learning Models Top 5 Amazing Models Of Machine Learning

Quantum Machine Learning Is The Next Big Thing

Electronics Free Full Text A State Of The Art Survey On Deep Learning Theory And Architectures Html

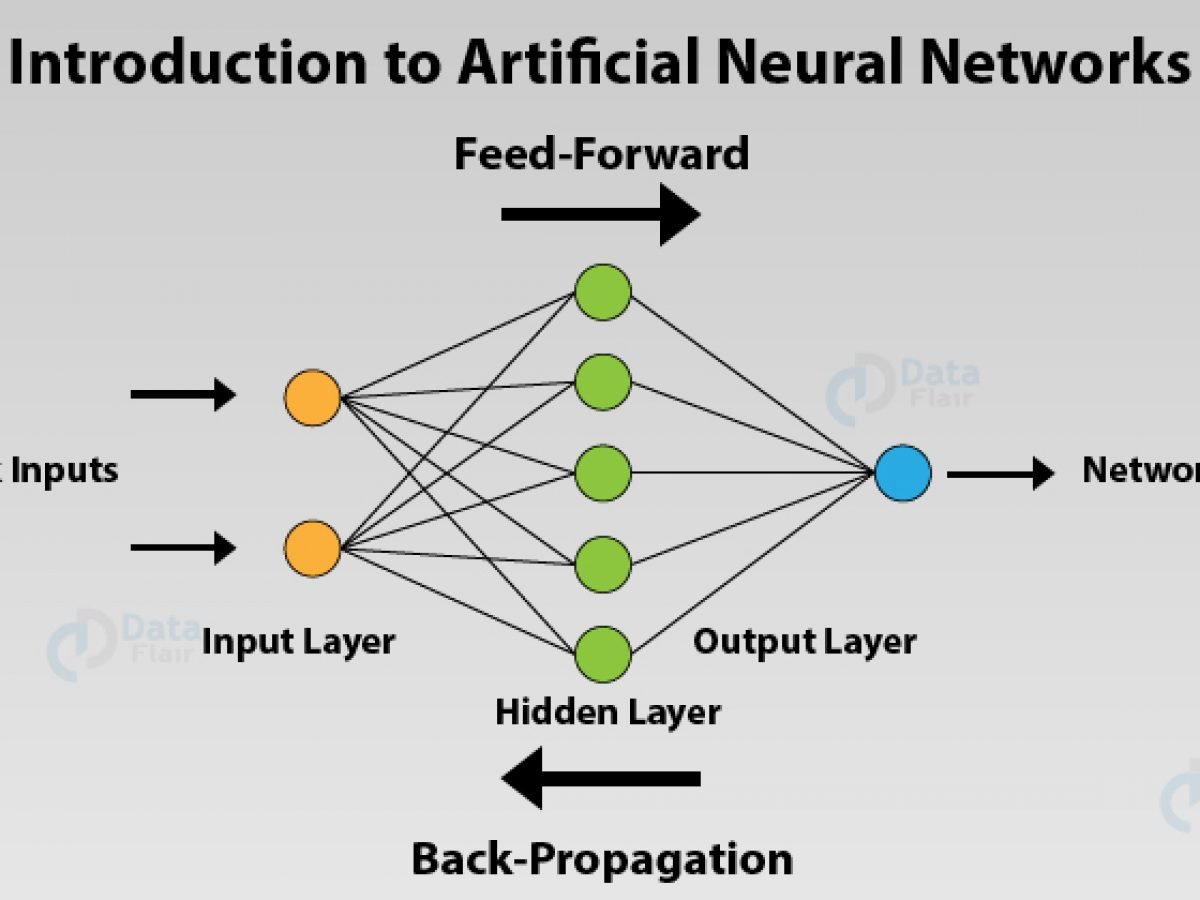

Artificial Neural Networks For Machine Learning Every Aspect You Need To Know About Dataflair

Wave Physics As An Analog Recurrent Neural Network Science Advances Physics Machine Learning Models Data Science

Integrating Machine Learning With Human Knowledge Sciencedirect

Uniformly Accurate Machine Learning Based Hydrodynamic Models For Kinetic Equations Pnas

A Complete Machine Learning Guide In Python Part 2 Machine Learning Learning New Details

What Is The Kernel Trick Why Is It Important By Grace Zhang Medium

Move Over Neural Networks A New Method For Cosmological Inference Astrobites Cosmic Web Machine Learning Methods Inference

What S New In Machine Learning Deep Learning Matlab Simulink

A Tour Of Machine Learning Algorithms

Tinyml When Small Iot Devices Call For Compressed Machine Learning News Machine Learning Iot Learning Technology

Downloadable Cheat Sheets For Ai Neural Networks Machine Learning Deep Learning Data Science Data Science Deep Learning Machine Learning Deep Learning

Kernels Introduction Practical Machine Learning Tutorial With Python P 29 Youtube

Post a Comment for "Kernel Approximation Machine Learning"